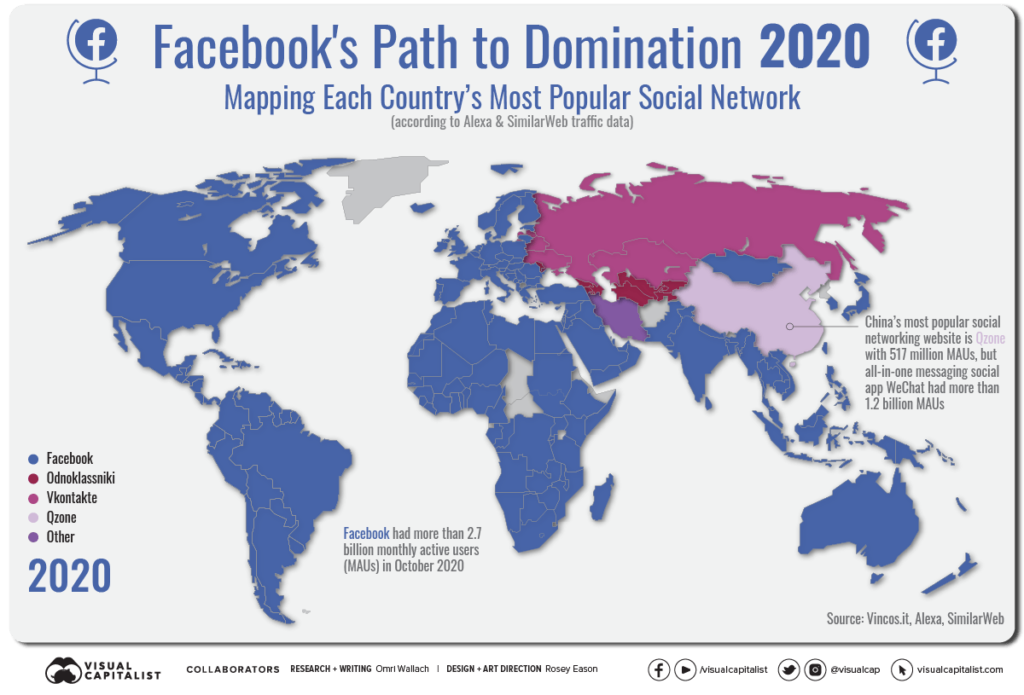

Revelations from whistleblowers this year paint a picture of Facebook as a global tech giant that is growing rapidly but failing to acknowledge or address a host of negative impacts from its platforms. The company has reportedly failed to mitigate disinformation, including falsehoods related to Covid-19, the 2020 U.S. presidential election, and events abroad, and has knowingly suppressed information that could be harmful to its bottom line.

Facebook contests that characterization, saying that its data and actions are being misrepresented to support an unflattering narrative. Facebook executives point to the platform’s vast user base and the connections it creates as a global good. The company acknowledges some of its shortcomings while highlighting its efforts to mitigate known problems. Even whistleblower Frances Haugen defends the company: “I don’t hate Facebook. I love Facebook. I want to save it.”

This week, The Factual uses 28 articles from 17 news sources across the political spectrum to dissect the arguments made against Facebook and assess whether its souring reputation is truly deserved. Specifically, this article examines Facebook’s track record on three contentious issues: Covid-19 vaccine disinformation, the negative impacts of social media on adolescents, and the incitement of ethnic violence.

Is Facebook Doing Enough to Combat Disinformation?

Facebook’s potential to be a vector for disinformation became a mainstream concern during the 2016 U.S. presidential election, when Russia’s Internet Research Agency was found to have used networks of bots and “coordinated inauthentic behavior” to sow discord among American voters. More recently, the company has struggled to contain Covid-19 and vaccine disinformation on its services. President Biden even went so far as to suggest that Facebook’s policies were actively killing Americans (remarks he later walked back). Realistically, however, no one knows the true scope of the problem.

Facebook has touted its efforts against vaccine disinformation, particularly as founder Mark Zuckerberg saw the issue as an opportunity to demonstrate Facebook’s positive value. As evidence, the company highlights the removal of millions of pieces of content and the dedication of internal resources, including thousands of content moderators, to monitor the issue and adjust internal policies as the situation evolves.

While such efforts demonstrate the company’s positive intent, the sheer scale of its platforms makes effective monitoring a challenge. The company often cites removal rates of 90% or higher for hate speech, but that figure refers only to content flagged proactively by the company’s AI. Leaked internal documents suggest the company may actually be removing as little as 3-5% of all hate speech on the platform. The result is a clash between the company’s “best efforts,” which it describes as mostly successful at removing harmful content, and society’s expectation of zero or minimal harm.

From the outside, there’s no way of telling how much disinformation is being shared. Facebook’s data, which could reveal the full extent of disinformation on the platform, is largely kept under wraps, apart from a few limited partnerships with external researchers. This policy has drawn criticism from government and academic researchers alike. Reportedly, even internal teams seeking to investigate the extent of Covid-19 disinformation have been denied the resources to fully explore the problem.

When a study by the Center for Countering Digital Hate found that some 65% of Covid-19 disinformation shared on Facebook originated from the accounts of just 12 people, dubbed the “Disinformation Dozen,” Facebook responded by labeling misleading posts, removing accounts that repeatedly broke its rules, and restricting access. The company’s belated move to deplatform some of these individuals may point to a central tension in Facebook’s algorithmic operations: greater engagement means greater revenue. That tension is further illustrated by programs such as Facebook’s “XCheck,” which critics argue has been used to shield high-profile users from the normal enforcement of community standards. But where critics see profit-motivated decisions, others see a cautious approach to content removal that attempts to balance free speech with the timely removal of verifiably false content.

To be fair, Facebook says it has made algorithmic changes in the past several years that contradict this narrative, such as a 2018 decision to prioritize content from family and friends in an effort to foster more meaningful interactions between users. This was based on the finding that passive consumption of news-related content was having a negative impact on users’ well-being. Zuckerberg even noted: “I expect the time people spend on Facebook and some measures of engagement will go down.” Although the change was nominally well-intentioned, the Wall Street Journal reports, as part of its Facebook Files series, that it had unanticipated effects, such as boosting posts with more comments and engagement, including controversial or divisive content. According to the Journal, this ultimately produced an “angrier” platform.

Ultimately, Facebook’s level of involvement in and knowledge of such disinformation is unknown, given the lack of publicly available data. Yet, it’s easy to see how the company’s incentives may make it less likely to enact the best policies to combat disinformation.

Are Facebook’s Services Harmful to Young Users?

A running theme in Facebook’s policies seems to be withholding data that could harm the company. A “bombshell” revelation in the documents shared by Haugen was that Facebook’s own internal research found Instagram to be “harmful for a sizable percentage of [young users], most notably teenage girls.” Moreover, some 32% of teen girls said that when they felt bad about their bodies, the app made them feel worse. Unprompted, all groups, not just teen girls, also linked the app to higher levels of depression and anxiety.

Facebook has played down these findings. In response to the Facebook Files, the company set out to clarify the cited research, arguing in particular that coverage of it downplayed Instagram’s many positive impacts: “Body image was the only area where teen girls who reported struggling with the issue said Instagram made it worse as compared to the other 11 areas.” Likewise, the company noted that for the majority of teen girls who felt negatively about their bodies, Instagram had a positive or neutral effect. Facebook also highlighted the research’s shortcomings: it was an informal study of only 40 teen girls that, the company says, was “designed to inform internal conversations.” This has led some to argue that the study isn’t much of a revelation at all and has been blown out of proportion.

However, the controversy comes at a bad time for Facebook, which had to halt work on its “Instagram Kids” project, a version of Instagram tailored to children younger than 13. Instagram has positioned the idea as a safer alternative for younger kids who may be downloading and using apps meant for older users, and the company places heavy emphasis on parental supervision and safeguards. But many, including lawmakers, have lost faith in Facebook’s ability and willingness to protect users. When asked at a congressional hearing whether the company had studied the app’s potential effects on young children, Zuckerberg unconvincingly replied, “I believe the answer is yes.”

Is Facebook Enabling Ethnic Violence?

Some of Haugen’s most eye-catching statements draw attention to Facebook’s lax oversight policies in the developing world — in her words, the company’s algorithms are “literally fanning ethnic violence.” Facebook has received similar criticism in the past, and it appears such problems continue today.

For example, a 2018 report by The Guardian found that Facebook served as a key vector for nationalist rhetoric against the Muslim Rohingya minority in Myanmar, including posts urging violence. This included ethnically driven claims, such as posts that “falsely claimed mosques were stockpiling weapons.” According to Haugen, the same thing is happening today in Ethiopia’s civil war, which centers on the Tigray region. Unverified information is being shared to pit groups against one another, leading to mass killings and the destruction of entire villages.

Some point out that in Ethiopia and Myanmar, official government channels and forces are playing a major role in the violence, suggesting that it might have occurred regardless of Facebook’s existence. Yet if Facebook’s platforms play any role in encouraging violence, that raises serious questions about the company’s global impact.

As numerous whistleblowers indicate, Facebook’s rapid growth and drive for profits have led it to expand into new markets without fully contending with its potential to do harm. Whistleblowers say that when issues were raised internally, Facebook’s leadership prioritized efforts in the United States and Western Europe. As Haugen put it, “Facebook [has known] that engagement-based ranking is dangerous without integrity and security systems, but then not rolled out those integrity and security systems to most of the languages in the world.”

In places like Ethiopia and Myanmar, Facebook is often one of the only sources of information due to limited internet access, yet the company has not developed the same level of safeguards or the same capability to monitor and control disinformation and harmful posts there. For example, as the Washington Post reported, Facebook had not developed algorithms to “detect hate speech in Hindi and Bengali, despite them being the fourth- and seventh-most spoken languages in the world, respectively.” Likewise, in Pakistan, “Facebook’s community standards, the set of rules that users must abide by, were not translated into Urdu, the national language of Pakistan. Instead, the company flipped the English version so it read from right to left, mirroring the way Urdu is read.”

Worryingly, even where such systems are in place, Facebook seems unable to keep up with the spread of malicious or falsified content. Facebook’s own analysis of the “Stop the Steal” movement and the January 6 attack admitted that the company “helped incite the Capitol Insurrection.” Even with a number of measures to suppress disinformation, including removing false posts and banning relevant groups, individuals tied to the Stop the Steal movement continued to post, evade detection, and create new groups when old ones were shut down. Despite numerous warnings before and after the election, Facebook executives failed to take stronger measures or commit more substantial resources to controlling the spread of disinformation. As Facebook’s own report later acknowledged, the company was prepared to handle “inauthentic behavior” (e.g., from bots) but not “coordinated authentic harm.”

Is Facebook Responsible?

As much as Facebook may contest its role in, or the characterization of, each of these issues, its own policies and leadership come into question time and again. Of course, new technologies often lead to unpredictable outcomes, but Facebook seems consistently to be a step behind the real-world effects of its platforms, even in places where it is devoting substantial resources. Rapid global expansion means these risks are also spreading around the world in ways that we do not fully understand and that Facebook is even less capable of controlling.

It may be impossible to measure the positive social benefits and connections created by platforms like Facebook and Instagram, so a true assessment of Facebook’s net societal value is unrealistic. But as further instances of negative real-world impacts mount, from anxiety and depression to violence and killings, Facebook will face stronger pushback from the public and lawmakers. That may mean more stringent regulation or even breaking up the company entirely. Haugen may want to save Facebook, but the company’s opportunities to prove it is a force for good are running out.

Photo by Roman Martyniuk on Unsplash